
An introduction to Markov chains, a type of random process in which the future state depends only on the current state. Markov chains are used to model various systems and to make predictions about their future behavior. Key topics include the definition of Markov chains, their key features, and applications in weather forecasting, web navigation, and more.

Typology: Study notes


Suppose that $X_7$ depends only on $X_6$, $X_6$ depends only on $X_5$, $X_5$ on $X_4$, and so forth. In general, if for all states $i, j, i_0, \ldots, i_{n-1}$ and all times $n$,

$$P(X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \ldots, X_0 = i_0) = P(X_{n+1} = j \mid X_n = i),$$

then this process is what we call a Markov chain.
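To make the definition concrete, here is a minimal Python sketch that samples a path from a chain. The function name `simulate_chain` and the storage of the probability of moving from state `i` to state `j` as `P[i][j]` are illustrative assumptions, not part of these notes; the point is that each new state is drawn using only the current state.

```python
import random


def simulate_chain(P, start, steps, seed=None):
    """Sample a path X_0, X_1, ..., X_steps of a Markov chain.

    P[i][j] is assumed to hold the probability of moving from state i
    to state j (states are the integers 0 .. len(P) - 1).
    """
    rng = random.Random(seed)
    states = list(range(len(P)))
    path = [start]
    for _ in range(steps):
        current = path[-1]
        # Only the current state is consulted: the earlier part of
        # `path` plays no role in choosing the next state.
        nxt = rng.choices(states, weights=P[current], k=1)[0]
        path.append(nxt)
    return path


# A two-state chain that always switches state is deterministic:
print(simulate_chain([[0, 1], [1, 0]], start=0, steps=4))  # [0, 1, 0, 1, 0]
```

Passing a `seed` makes a random run reproducible, which is convenient when comparing simulated paths against the probabilities computed by hand.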

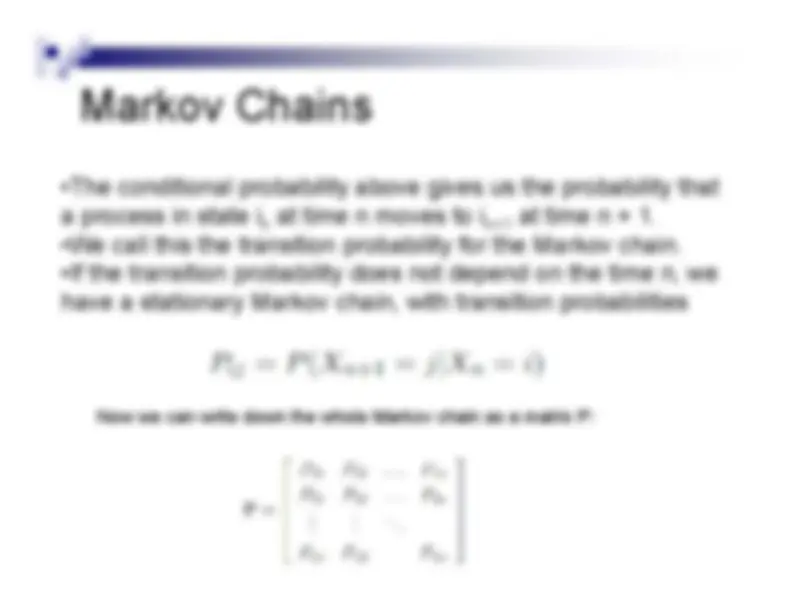

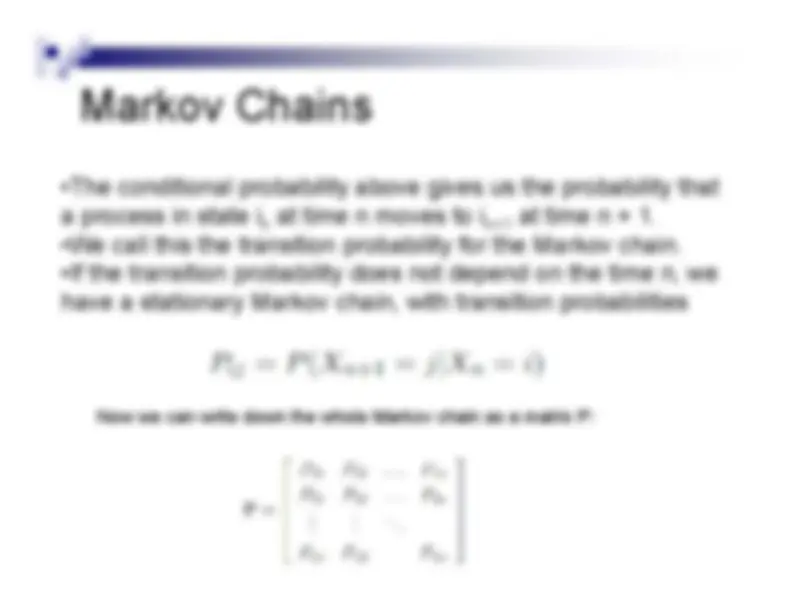

• The conditional probability above gives the probability that a process in state $i$ at time $n$ moves to state $j$ at time $n + 1$.

• We call this the transition probability of the Markov chain.

• If the transition probability does not depend on the time $n$, we have a stationary Markov chain, with transition probabilities

$$p_{ij} = P(X_{n+1} = j \mid X_n = i).$$

Now we can write down the whole Markov chain as a matrix $P$, where row $i$ lists the probabilities of moving from state $i$ to each state $j$:

$$P = \begin{pmatrix} p_{00} & p_{01} & p_{02} & \cdots \\ p_{10} & p_{11} & p_{12} & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$
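For a stationary chain, multi-step predictions come from powers of $P$: the entry in row $i$, column $j$ of $P^n$ is the probability of going from state $i$ to state $j$ in exactly $n$ steps (the Chapman–Kolmogorov equations). The sketch below assumes $P$ is stored as a list of rows indexed from 0; `mat_mul`, `n_step`, and the two-state example matrix are illustrative choices, not taken from these notes.

```python
def mat_mul(A, B):
    """Multiply two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def n_step(P, n):
    """Return P^n for a stationary chain with transition matrix P.

    Entry [i][j] of the result is P(X_{m+n} = j | X_m = i) for any m.
    """
    size = len(P)
    # Start from the identity matrix, which is P^0.
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, P)
    return result


# An arbitrary two-state example.
P = [[0.5, 0.5],
     [0.25, 0.75]]
print(n_step(P, 2))  # [[0.375, 0.625], [0.3125, 0.6875]]
```

Repeated multiplication keeps the sketch short; for large $n$, squaring the matrix repeatedly would need far fewer products.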

The PageRank of a webpage is the probability of being at page $i$ in the stationary distribution of the following Markov chain on all (known) webpages. If $N$ is the number of known webpages and page $i$ has $k_i$ links, then it has transition probability

$$p_{ij} = \frac{\alpha}{k_i} + \frac{1 - \alpha}{N}$$

for all pages $j$ that are linked to, and

$$p_{ij} = \frac{1 - \alpha}{N}$$

for all pages $j$ that are not linked to. In other words, the surfer follows one of page $i$'s links with probability $\alpha$ and jumps to a uniformly random page otherwise. The parameter $\alpha$ is taken to be about 0.85.
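The random-surfer chain can be sketched in Python: each page $j$ receives $(1-\alpha)/N$, and each page linked from page $i$ receives an extra $\alpha/k_i$. This is a minimal illustration, not Google's implementation. The function names, the link-list input format, the hypothetical 3-page web, and the use of power iteration (repeatedly multiplying a distribution by $P$ until it stops changing) to approximate the stationary distribution are all assumptions of this sketch. Pages with no links are rejected because the formula divides by $k_i$.

```python
def pagerank_matrix(links, alpha=0.85):
    """Transition matrix of the random-surfer chain.

    links[i] lists the pages that page i links to; pages are 0 .. N-1.
    Assumes every page has at least one outgoing link (k_i > 0).
    """
    N = len(links)
    P = []
    for i, targets in enumerate(links):
        targets = set(targets)
        if not targets:
            raise ValueError(f"page {i} has no links; k_i must be positive")
        k = len(targets)
        # Every page j gets (1 - alpha) / N ...
        row = [(1 - alpha) / N] * N
        # ... and each linked page gets an extra alpha / k_i.
        for j in targets:
            row[j] += alpha / k
        P.append(row)
    return P


def stationary_distribution(P, tol=1e-12, max_iter=10_000):
    """Approximate the stationary distribution by power iteration."""
    N = len(P)
    pi = [1.0 / N] * N  # start from the uniform distribution
    for _ in range(max_iter):
        new = [sum(pi[i] * P[i][j] for i in range(N)) for j in range(N)]
        if max(abs(a - b) for a, b in zip(new, pi)) < tol:
            return new
        pi = new
    return pi


# Hypothetical 3-page web: page 0 links to 1; pages 1 and 2 both link to 0.
links = [[1], [0], [0]]
ranks = stationary_distribution(pagerank_matrix(links))
print([round(r, 4) for r in ranks])  # [0.4865, 0.4635, 0.05]
```

Because every entry of $P$ is at least $(1-\alpha)/N > 0$, the chain has a unique stationary distribution and power iteration converges to it. Page 2, which no page links to, ends up with only the baseline $(1-\alpha)/N = 0.05$.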

The state of the system at time $t + 1$ depends only on the state of the system at time $t$:

$$\Pr(X_{t+1} = x_{t+1} \mid X_1 = x_1, \ldots, X_t = x_t) = \Pr(X_{t+1} = x_{t+1} \mid X_t = x_t)$$

[Figure: chain of states $X_1 \to X_2 \to X_3 \to X_4 \to X_5$]

Transition probabilities are independent of time ($t$):

$$\Pr(X_{t+1} = b \mid X_t = a) = p_{ab}$$
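Together, these two properties mean that the probability of a whole sequence factors into an initial probability times one transition probability $p_{ab} = \Pr(X_{t+1} = b \mid X_t = a)$ per step:

$$\Pr(X_1 = x_1, \ldots, X_T = x_T) = \Pr(X_1 = x_1) \prod_{t=1}^{T-1} p_{x_t x_{t+1}}.$$

A minimal Python sketch, assuming states are labels such as `"a"` and `"b"` and the transition probabilities are stored in a nested dict; the function name and the example numbers are illustrative.

```python
def path_probability(initial, p, path):
    """Probability of observing `path` under a time-homogeneous Markov chain.

    initial[a] is Pr(X_1 = a); p[a][b] is p_ab = Pr(X_{t+1} = b | X_t = a).
    """
    prob = initial[path[0]]
    # Markov property: each factor depends only on the previous state.
    for a, b in zip(path, path[1:]):
        prob *= p[a][b]
    return prob


initial = {"a": 0.5, "b": 0.5}
p = {"a": {"a": 0.5, "b": 0.5},
     "b": {"a": 0.25, "b": 0.75}}
print(path_probability(initial, p, ["a", "b", "b"]))  # 0.1875
```

Since $p_{ab}$ does not depend on $t$, the same table `p` is reused at every step of the path.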

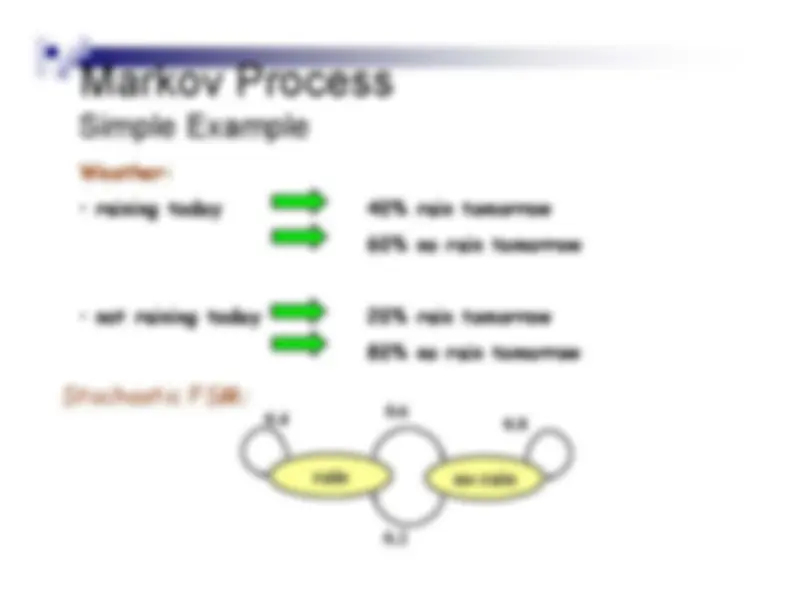

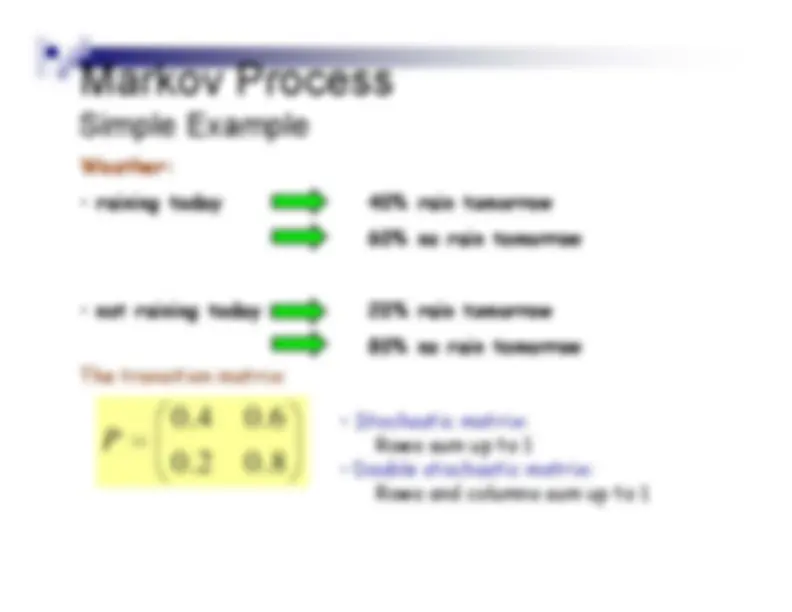

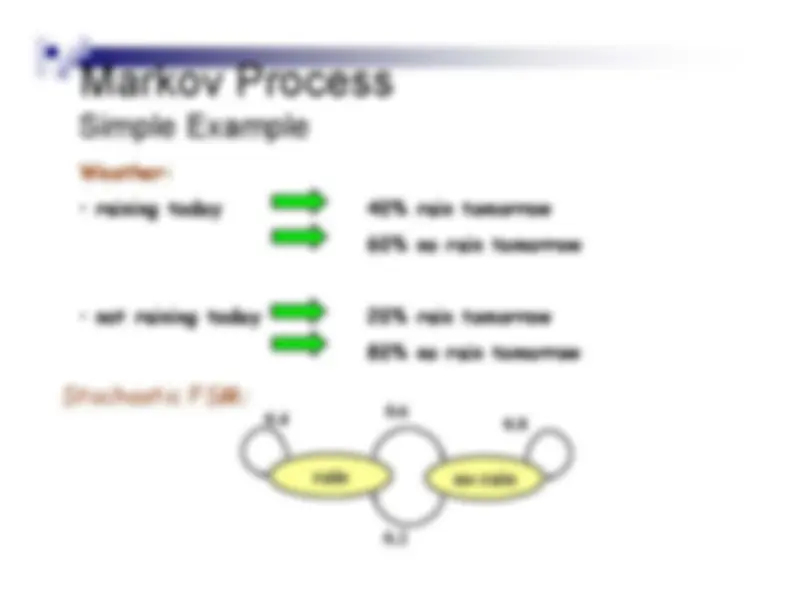

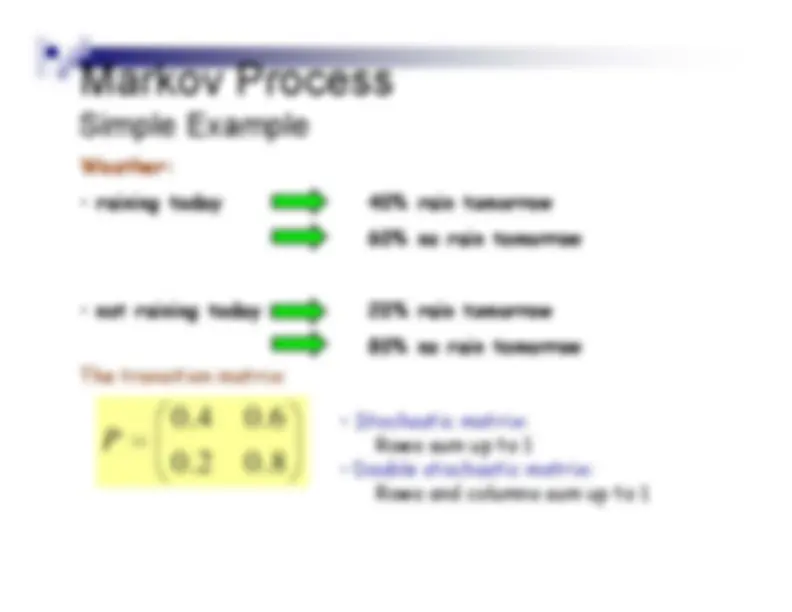

• Raining today: 40% rain tomorrow, 60% no rain tomorrow

• Not raining today: 20% rain tomorrow, 80% no rain tomorrow

The transition matrix (states ordered rain, no rain):

$$P = \begin{pmatrix} 0.4 & 0.6 \\ 0.2 & 0.8 \end{pmatrix}$$

• Stochastic matrix: rows sum to 1

• Doubly stochastic matrix: rows and columns sum to 1
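The definitions and a two-day forecast can be checked with a short Python sketch. It uses the weather probabilities given above (raining today: 40% rain tomorrow; not raining today: 20% rain tomorrow), with rain as state 0. The helper names are illustrative. The matrix is stochastic but not doubly stochastic, because its columns sum to 0.6 and 1.4. Starting from rain, the chance of rain two days later is $0.4 \times 0.4 + 0.6 \times 0.2 = 0.28$.

```python
# States: 0 = rain, 1 = no rain.
P = [[0.4, 0.6],
     [0.2, 0.8]]


def is_stochastic(M, tol=1e-9):
    """Every entry is non-negative and every row sums to 1."""
    return all(all(x >= 0 for x in row) and abs(sum(row) - 1) < tol for row in M)


def is_doubly_stochastic(M, tol=1e-9):
    """Stochastic, and every column also sums to 1."""
    columns = zip(*M)
    return is_stochastic(M, tol) and all(abs(sum(col) - 1) < tol for col in columns)


def forecast(dist, M, days):
    """Push a distribution over today's weather `days` steps forward."""
    n = len(M)
    for _ in range(days):
        dist = [sum(dist[i] * M[i][j] for i in range(n)) for j in range(n)]
    return dist


print(is_stochastic(P), is_doubly_stochastic(P))  # True False
# It rains today; chance of rain the day after tomorrow:
print(round(forecast([1.0, 0.0], P, 2)[0], 2))  # 0.28
```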

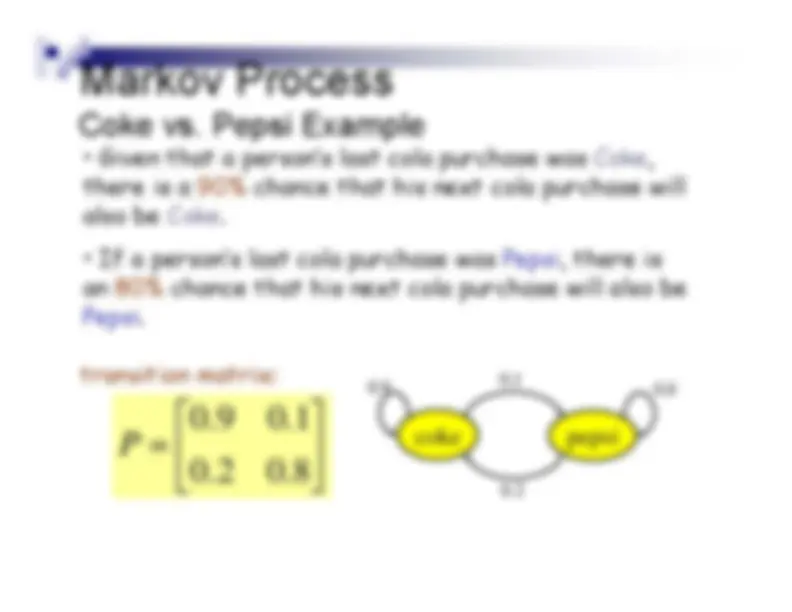

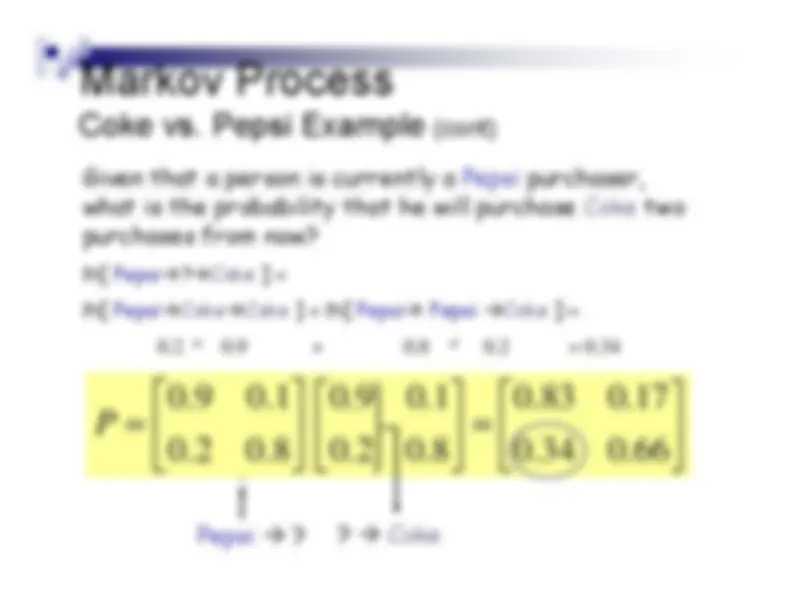

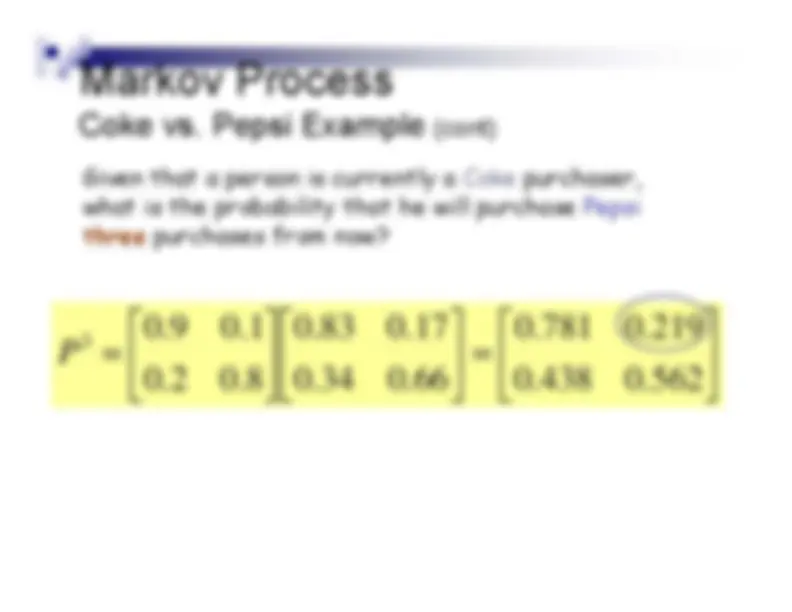

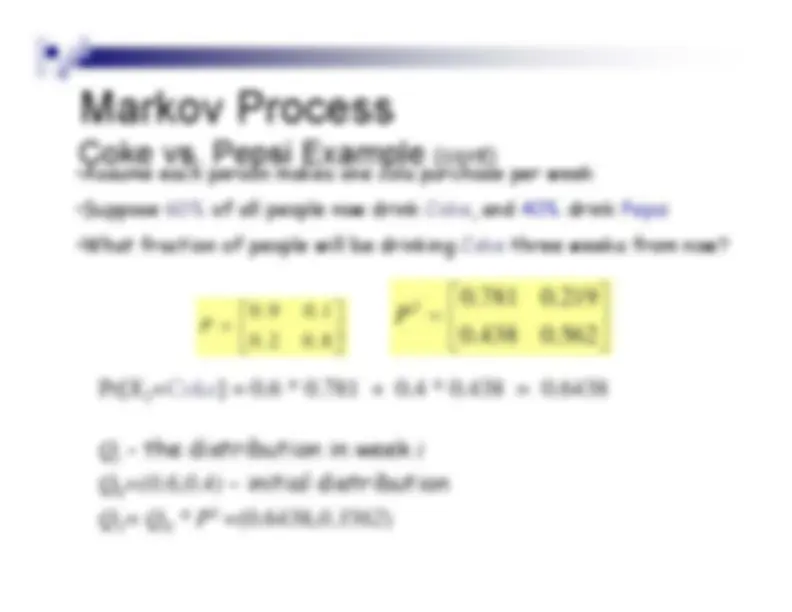

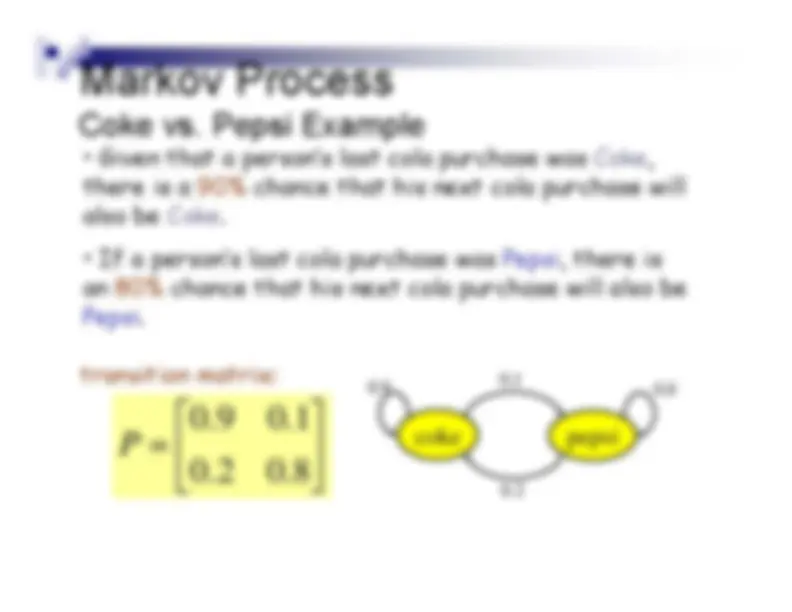

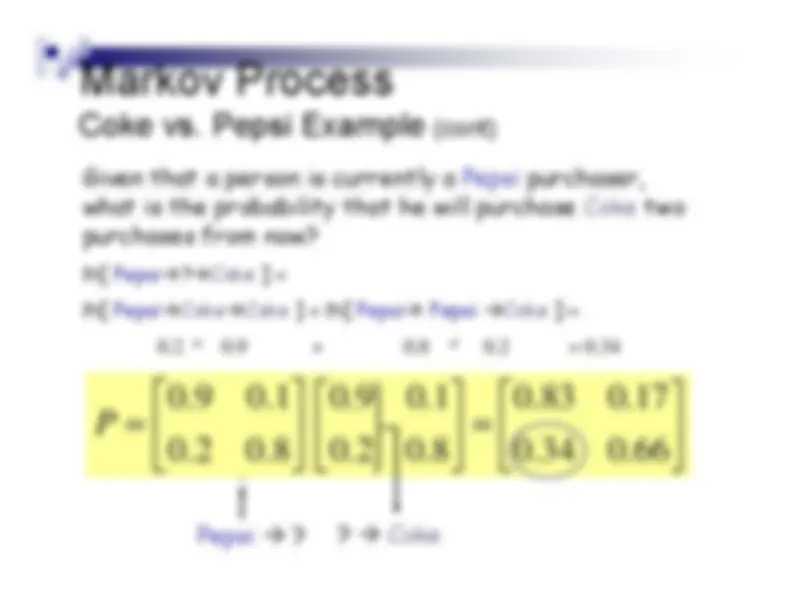

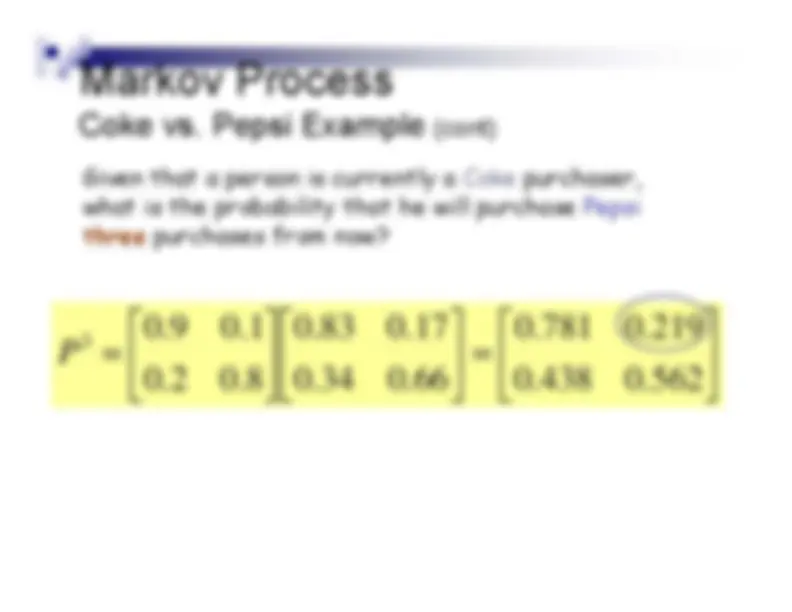

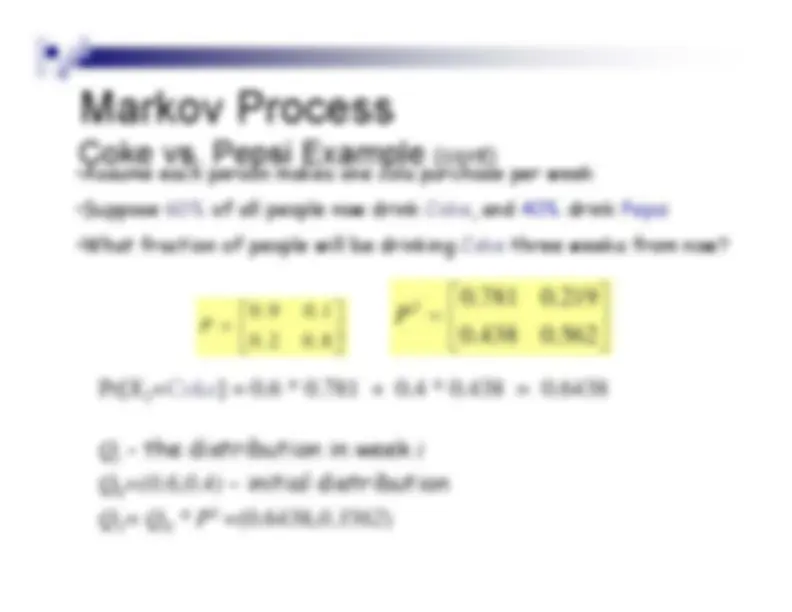

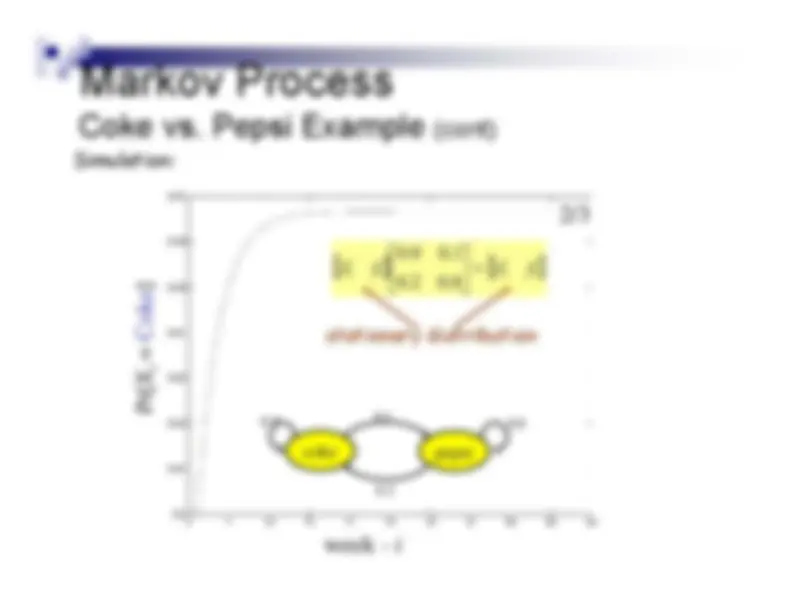

chance that his next cola purchase will also be Coke.

• If a person's last cola purchase was Pepsi, there is an 80% chance that his next cola purchase will also be Pepsi.

Transition matrix, with rows and columns ordered Coke, Pepsi (the Pepsi row is $(0.2,\ 0.8)$):