Study with the several resources on Docsity

Earn points by helping other students or get them with a premium plan

Prepare for your exams

Study with the several resources on Docsity

Earn points to download

Earn points by helping other students or get them with a premium plan

Community

Ask the community for help and clear up your study doubts

Discover the best universities in your country according to Docsity users

Free resources

Download our free guides on studying techniques, anxiety management strategies, and thesis advice from Docsity tutors

Main points of Introduction to Digital Libraries are: Information Retrieval, Specific Information, Relevant Information, Common Modern Application, Search Engines, Technology, Fundamental, Conducted Pre-Internet, Document, Query

Typology: Slides

1 / 50

This page cannot be seen from the preview

Don't miss anything!

(^) Retrieving all the possibly relevant results for a given query.

(^) Creating indices of all the documents/data to enable faster searching/quering.

(^) Retrieval of a set of matching documents in decreasing order of estimated relevance to the query.

(^) Queries are specified as boolean expressions and only documents matching those criteria are returned. (^) e.g., apples AND bananas

(^) Both queries and documents are specified as lists of terms and mapped into an n - dimensional space (where n is the number of possible terms). The relevance then depends on the angle between the vectors.

(^) Altavista, Google, etc. (^) Vector models are not as efficient as boolean models.

(^) Removal of non-relevant results. (^) Filtering restricts the number of results to those that are probably relevant.

(^) Ordering of results according to calculated probability of relevance. (^) Ranking puts the most probably relevant results at the “top of the list”.

bananas bananas apples bananas bananas apples bananas apples apples Doc Doc (^) An inverted file for a term contains a list of document identifiers that correspond to that term. Doc1: 1 Doc2: 4 Doc1: 3 Doc2: 1 bananas 5 original apples 4 documents inverted files

Implementation of Inverted Files

(^) Each term can be a separate file, sorted by weight. (^) Terms, documents identifiers and weights can be stored in an indexed database.

(^) The MG system (part of Greenstone) uses index compression and claims 1/3 as much space as the original data.

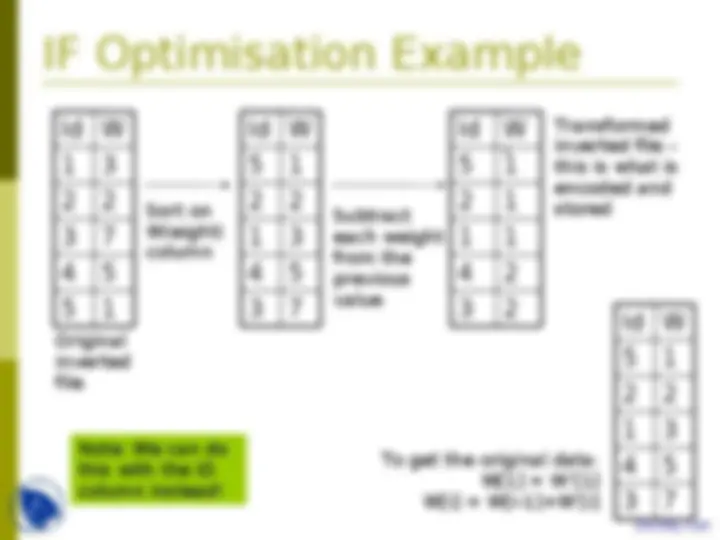

Id W 5 1

Id W 3 7

Id 3 2

Id W 3 7

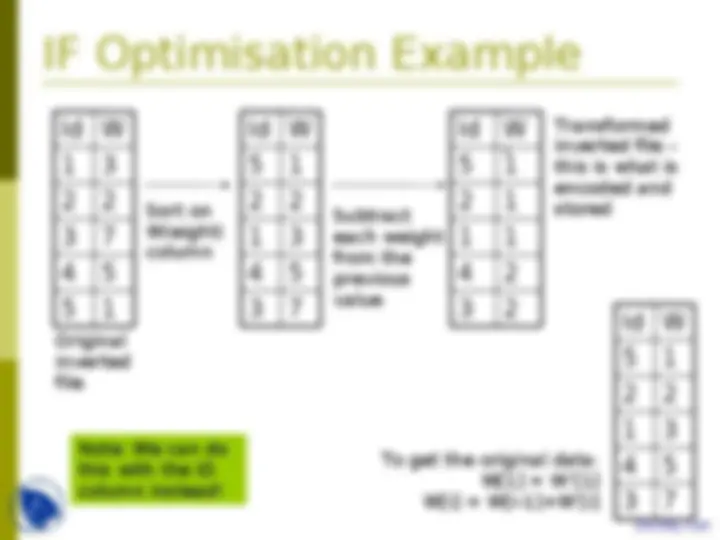

Original inverted file Sort on W(eight) column To get the original data: W[1] = W’[1] W[i] = W[i-1]+W’[i] Subtract each weight from the previous value Transformed inverted file – this is what is encoded and stored Note: We can do this with the ID column instead!

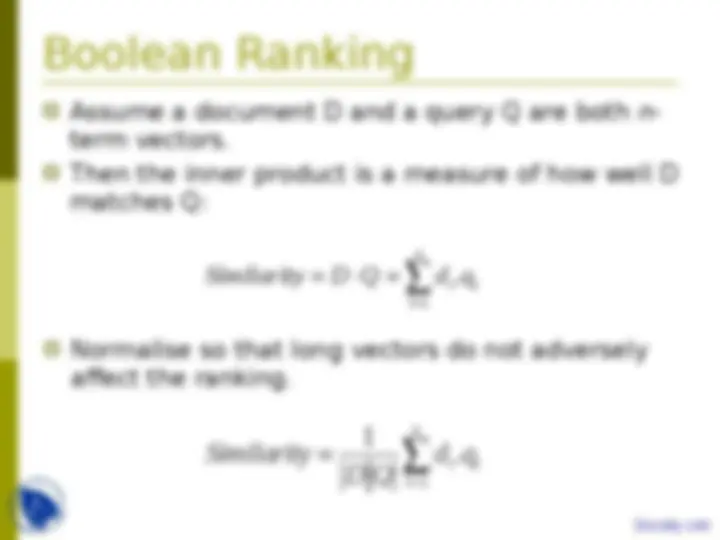

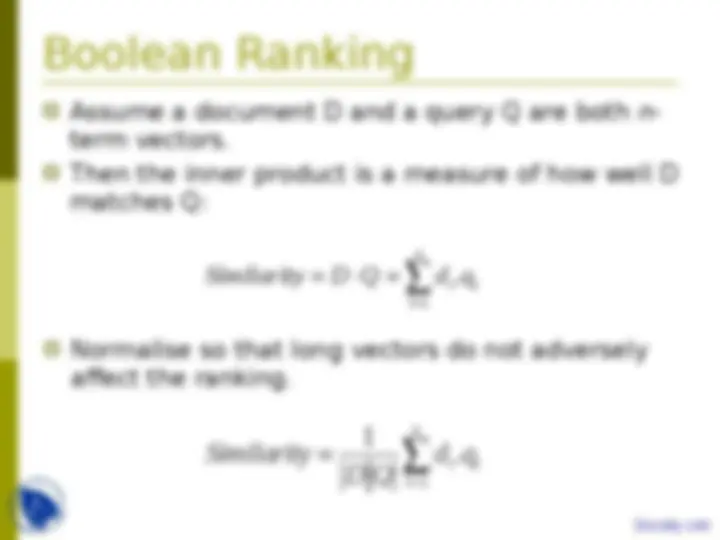

(^) Assume a document D and a query Q are both n - term vectors. (^) Then the inner product is a measure of how well D matches Q: ∑ =

n t t t

1

= n t t t d q D Q Similarity 1 . 1 (^) Normalise so that long vectors do not adversely affect the ranking.

(^) The number of occurrences of a term in a document – terms which occur more often in a document have higher tf.

(^) The number of documents a term occurs in – popular terms have a higher df.

(^) Common formulation: (^) Where f t is the number of documents term t occurs in (document frequency) and N is the total number of documents. (^) Many different formulae exist – all increase the importance of rare terms. (^) Now, weight the query in the ranking formula to include an IDF with the TF. w t =log e

1 N f t

t = 1 n

t

e

t

t

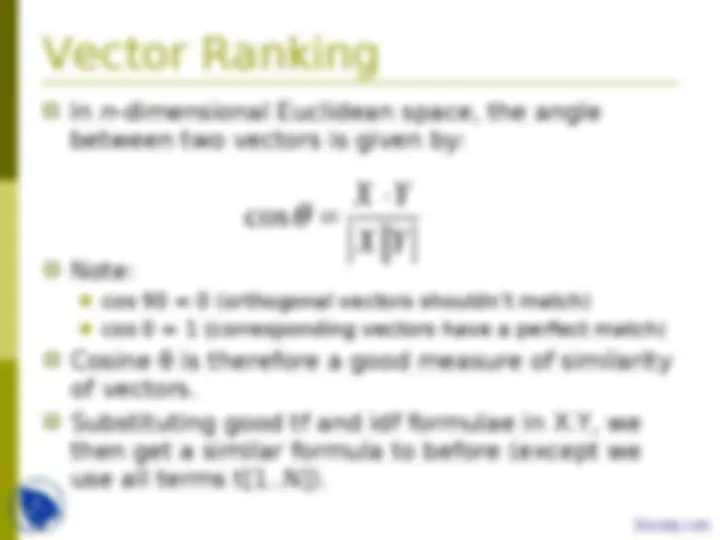

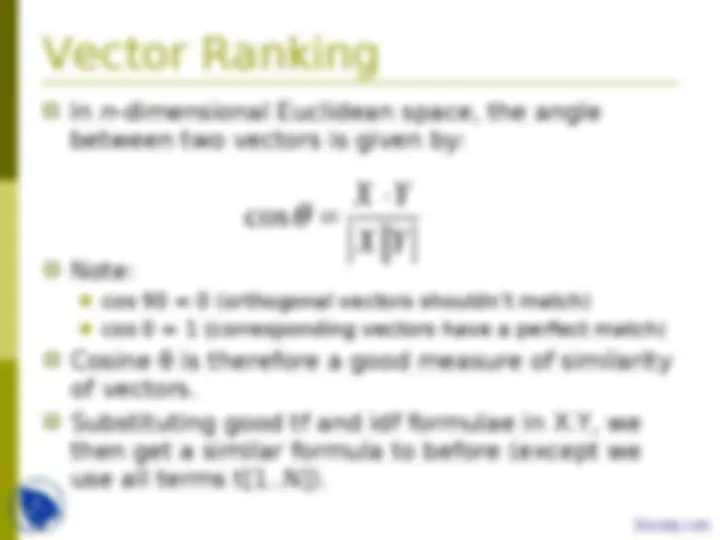

(^) In n -dimensional Euclidean space, the angle between two vectors is given by: X Y X ⋅ Y cos θ = (^) Note: (^) cos 90 = 0 (orthogonal vectors shouldn’t match) (^) cos 0 = 1 (corresponding vectors have a perfect match) (^) Cosine θ is therefore a good measure of similarity of vectors. (^) Substituting good tf and idf formulae in X.Y, we then get a similar formula to before (except we use all terms t[1..N]).

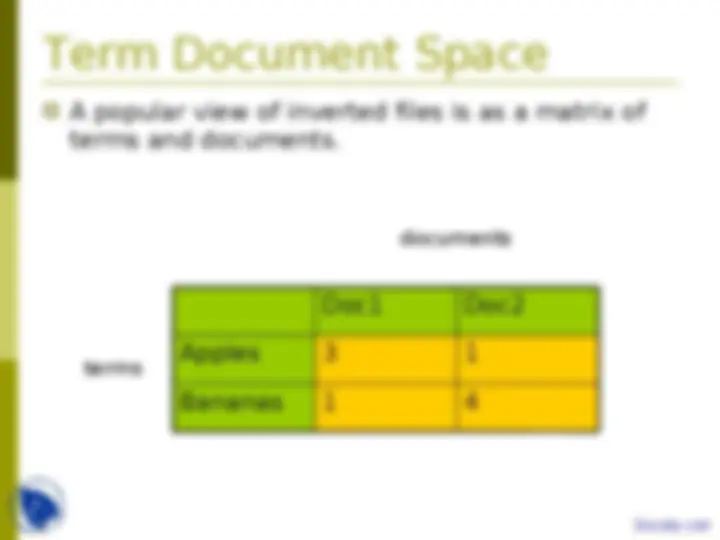

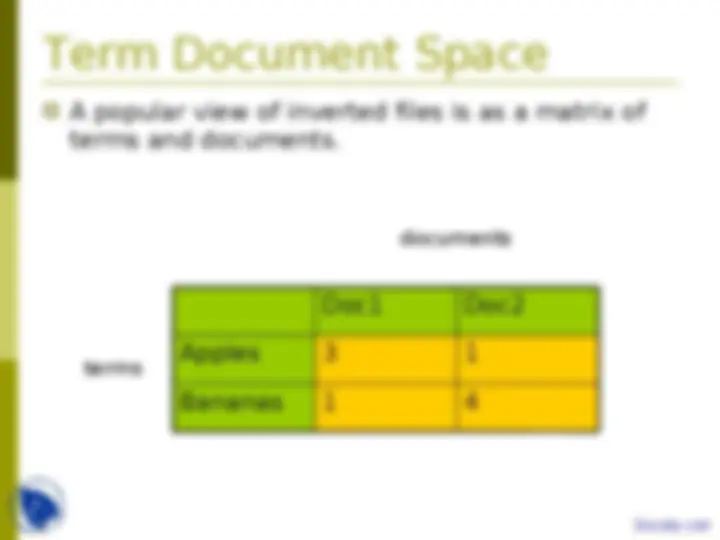

(^) A popular view of inverted files is as a matrix of terms and documents. Bananas 1 4 Apples 3 1 Doc1 Doc documents terms